One of the perks of my job is that I get to work with some incredible partners. One of those is Confluent, probably best known for being the primary maintainer of the Apache Kafka project.

This year, Confluent is doing a multi-city “Data In Motion” tour. The name comes from a focus on real-time data processing. Modern applications often need to collect data from one or more sources, enrich it, and then use the enriched data to provide useful information to the end user, usually in real time. The stop I attended was a half-day seminar exploring some solutions to that use case.

The event was held at a place called Boxyard RTP. It has been many years since I worked in the Research Triangle Park and it has really grown in that time. Boxyard is made up of repurposed shipping containers. There are restaurants, a bar, a stage (when I walked up a band was playing) and for the purposes of this seminar there was an area on the second level with a conference room and a patio.

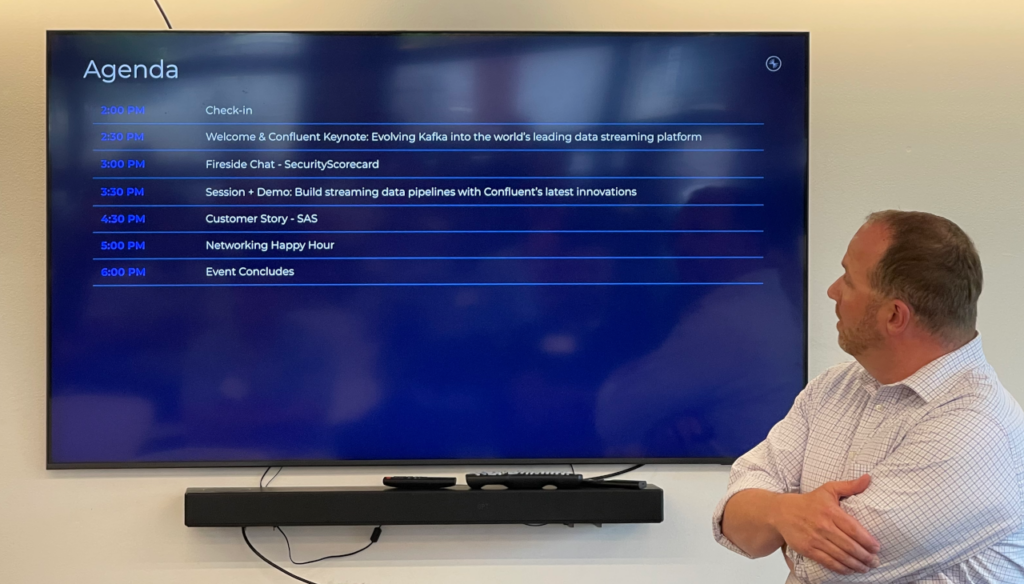

The agenda consisted of four main items: an overview of what is going on with Confluent’s offerings, a “fireside chat” about using real-time data for security, an hour-long demo of new functionality and a customer success story.

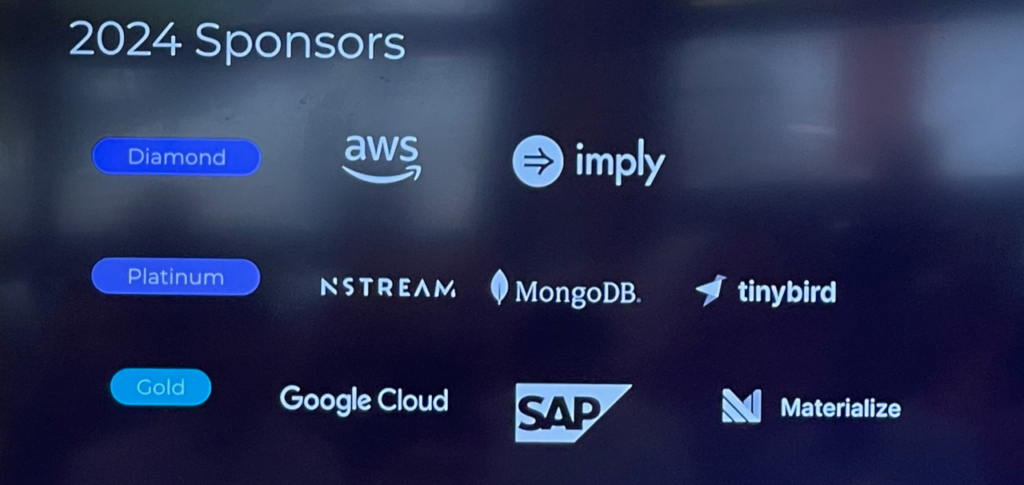

It was cool to see that AWS was a Diamond Sponsor of this event.

The first presenter was Ananda Bose, the Director of Solutions Engineering at Confluent. He covered some of the new products available from Confluent, especially Kora. Kora is a cloud-native implementation of Apache Kafka.

At my previous company we wanted to be able to offer our technology as a managed service, which was difficult since it was a monolithic Java application. The ultimate goal was to have a cloud-native version, and by that I mean a version of the application that can take advantage of cloud technologies that provide resilience and automatic scalability. Apache Kafka is also a Java app, and a lot of work must have gone into decoupling the storage, identity management, metrics and other aspects of the program to fit the cloud-native paradigm.

One thing I liked about Ananda’s presentation style was that he was very direct. Confluent has just completed an integration with Apache Flink, which is a stream processing framework. One thing that Flink brings is ANSI-compliant SQL. Prior to this integration people used KSQL, but the words that Ananda used to describe KSQL are not really appropriate for this family-friendly blog. (grin)

Kora reinforces something I’ve been saying about open source for some time. When it comes to open source software, people are willing to pay for three things: simplicity, stability and security. Kora does all three, and the design of Kora even won the “Best Industry Paper” award at last year’s Very Large Data Bases (VLDB) conference.

We would see Kora and Flink in action in the demo section.

The second talk was a fireside chat between Ananda and Dr. Jared Smith. Jared works at SecurityScorecard, a security risk mitigation company.

SecurityScorecard has to consume petabytes of data in order to detect malicious behavior on the network. The way their system works, the payload of a given message may be 20 megabytes or larger, and when they used RabbitMQ it simply couldn’t handle the workload. When they switched to Kora their scaling issues went away.

One cool story Jared told happened during the start of the war in Ukraine. SecurityScorecard placed a “honeypot” server in Kyiv and it was able to detect a large Russian botnet attacking the network. They were able to collect and block the IP addresses of the bots and thus mitigate the damage.

The next hour was taken up by a demo. ChunSing Tsui, a Senior Solutions Engineer, walked us through an example using Confluent Cloud, MongoDB and Terraform. The whole demo is available on GitHub if you’d like to recreate it on your own.

In this example, a shoe store called HappyFeet wants to monitor website traffic to identify customers who visit but don’t stay on the site very long. They could then use this information to try to re-engage those customers through a marketing campaign offering discounts, etc.

While I am in no way an expert at this stuff, it was engaging. There were four data sources that would be processed to provide an enriched data stream to MongoDB collections. What I did like about it is that the heart of the demo was all written in SQL.
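To make the idea a little more concrete, here is a small Python sketch of the logic as I understood it. This is not the code from the demo (that was SQL, and it lives in the GitHub repo). The field names, the 30-second threshold and the customer lookup are all my own assumptions, and a real stream processor would do this continuously rather than over a list in memory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Click:
    """One page view. Field names are hypothetical, not from the demo."""
    customer_id: str
    timestamp: float  # seconds since the epoch


def short_visitors(clicks, max_seconds=30.0):
    """Return sorted customer ids whose first-to-last click span is under max_seconds.

    The 30-second default is an arbitrary assumption for illustration.
    """
    spans = {}  # customer_id -> (earliest timestamp, latest timestamp)
    for click in clicks:
        first, last = spans.get(click.customer_id, (click.timestamp, click.timestamp))
        spans[click.customer_id] = (
            min(first, click.timestamp),
            max(last, click.timestamp),
        )
    return sorted(cid for cid, (first, last) in spans.items() if last - first < max_seconds)


def enrich(customer_ids, customers):
    """Attach customer details (a plain dict lookup here) to each flagged id."""
    return [
        {"customer_id": cid, **customers[cid]}
        for cid in customer_ids
        if cid in customers
    ]


clicks = [
    Click("alice", 0.0),
    Click("alice", 10.0),   # alice left after 10 seconds
    Click("bob", 0.0),
    Click("bob", 120.0),    # bob stayed two minutes
]
customers = {"alice": {"email": "alice@example.com"}}

flagged = short_visitors(clicks)
campaign = enrich(flagged, customers)
```

Here `flagged` contains only alice, and `campaign` is the enriched record a marketing system could pick up. In the demo the equivalent joins and filters were expressed as SQL statements running over the live streams.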

As an “old” I am not as up to date on the new hotness in cloud computing as I would like to be, but SQL I know. This was a product that took a difficult concept and made it accessible.

The final presentation was a customer success story from SAS Institute. It was given by Bryan Stines, Director of Product Management for the SAS Cloud, and Justin Dempsey, a Senior Manager for SAS Cloud Innovation and Automation Services.

It was a nice close to the meeting as the “Data In Motion” theme was very present here. One of the products SAS provides involves fraud detection for credit and debit card transactions. When a person swipes or taps a credit card, that sets off a series of events, possibly involving numerous checks, to detect fraud. All of this has to happen in a matter of milliseconds.

Now I am a big open source software enthusiast, but free software doesn’t mean a free solution. With my previous project we used technology such as Apache Kafka, Apache Cassandra, PostgreSQL and others. Our users had to either acquire or develop some of that expertise in-house, or they needed to find a partner, and that was the issue facing SAS. By partnering with Confluent they were able to get the most out of the software from the people who knew it best.

I no longer live that close to RTP, but I felt the three-hour round trip was worth it for this event. There are still several dates on the calendar, so if this interests you, please check it out.